Energy is how Africa wins at AI

Chapter 1: The Global AI Energy Demand Explosion and Africa's Thermodynamic Advantage

Before we start: I have zero data center experience. Never built one. My cooling system knowledge comes from YouTube. But I can do arithmetic, and apparently that makes me more qualified than whoever's greenlighting these infrastructure deals.

Everyone building AI infrastructure right now is wrong. Not a little wrong. Not 'we'll optimize later' wrong. They're spending 3 - 4 trillion USD to build data centers in the stupidest possible places because nobody wants to admit that physics beats vibes every single time.

Here's the executive summary before I assault you with thermodynamics equations I learned from Indian YouTubers at 2am...

The TLDR for people who bill more per hour than I make per month:

Everyone's about to blow $3-4 trillion building AI data centers in places where electricity costs 17x more than it should because apparently nobody in Silicon Valley owns a calculator or a thermometer.

Africa has cheaper power, better cooling physics (yes, really), and enough sun to make Germany cry. The hyperscalers already know this. They're quietly buying land while everyone else is still debating whether this violates their ESG guidelines.

The math doesn't care about your feelings. Neither does thermodynamics.

FAIR WARNING: What follows is obnoxiously long and shamelessly technical. There's math I derived from first principles (googled them), charts held together by prayer that Excel barely survived creating, and enough equations to make you think I know what I'm talking about. I don't. But the numbers don't lie, even when I might.

It's way too long. I know. I don't care.

The conclusion is definitely right. If you make it through, make sure to read the disclaimer at the end.

Still reading? Masochist. Fine. Let's talk about why everyone with a data center budget is about to become very, very angry with me.

We're about to spend 3 - 4 trillion USD building AI infrastructure in the wrong places because nobody did the thermodynamics homework. A single large model training run burns enough electricity to power a small city for months, and it costs up to 17x more on constrained grids than in energy-abundant regions.

This isn't about corporate social responsibility or helping Africa. This is about not being an idiot with electricity bills. When you need to cool 100 kW per rack in tropical heat, you stop pretending air conditioning will save you and start engineering real solutions. Those solutions end up better than anything in temperate climates because – plot twist – being forced to solve hard problems makes you better at solving problems. Who knew?

By 2030, companies still paying premium rates for compute will be as competitive as a fax machine. The math is brutal and it doesn't care about your innovation ecosystem. Energy costs compound. Physics doesn't negotiate. And thermodynamics always wins.

The Computational Arms Race Nobody Saw Coming

But first, a confession: I started this research trying to prove that Iceland, Antarctica, space, etc. were the future of AI. You know, cold = good for computers, right? Six spreadsheets later, I realized I was thinking like someone who learned physics from Reddit. Turns out heat isn't the enemy. Expensive cooling is.

Here's what happened while we were all arguing about ChatGPT writing college essays: The global technology industry accidentally started an infrastructure arms race that makes the 1990s datacenter boom look like a backyard barbecue.

Let me break this down with numbers that may make your CFO cry. A single training run for GPT-4 consumed approximately 50 GWh of electricity. That's enough to power 5,000 American homes for a year or 21,000 Nigerian homes for a year or 228,000 Liberian homes for a year (you get the idea). For one model. One time. And OpenAI isn't training it once—they're running multiple experiments, iterations, and variations. Conservative estimates suggest they burned through 500 GWh just in experimental runs before landing on the final architecture.

Every one of those computations, from the simplest arithmetic operation to the most complex neural network training run, represents an irreversible thermodynamic process. The Landauer principle tells us that erasing one bit of information dissipates at least kT ln(2) joules of energy, where k is Boltzmann's constant and T is the absolute temperature. At room temperature (300K), this minimum is approximately 3×10^-21 joules. Modern GPUs performing tensor operations for AI training consume roughly 10^12 times this theoretical minimum—a spectacular inefficiency that defines our current technological moment.
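For anyone who wants to check the Landauer figure rather than take it on faith, here is a minimal sketch. It uses the exact SI value of Boltzmann's constant; the 10^12 multiplier is simply the rough inefficiency factor quoted above, not a measured GPU number.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact SI value of Boltzmann's constant


def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy dissipated when one bit is erased at this temperature."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


limit_300k = landauer_limit_joules(300.0)
print(f"Landauer limit at 300 K: {limit_300k:.2e} J per bit")

# Scale by the rough 10^12 inefficiency factor quoted in the text.
gpu_scale_joules = limit_300k * 1e12
print(f"Implied energy scale for a GPU bit operation: {gpu_scale_joules:.2e} J")
```

The limit scales linearly with temperature, so running hotter or colder moves it by a few percent at most. The gap that matters is the twelve orders of magnitude, not the ambient temperature.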

Translation for normal humans: We're using the computational equivalent of a Hummer to deliver pizza. In a snowstorm. Uphill. The inefficiency is so spectacular it's almost beautiful.

This inefficiency creates an arbitrage opportunity of planetary scale. The energy cost of computation varies by more than an order of magnitude across global markets, from $0.02/kWh in certain African locations with stranded renewable resources to $0.35/kWh in European industrial zones. When that single GPT-4 training run's 50 GWh requirement hits different markets, the cost differential is staggering: $1 million in optimal African locations versus $17.5 million in constrained European grids. That's $16.5 million in savings for one model iteration. Multiply this by the thousands of experiments, iterations, and production deployments planned globally, and we're examining a cost differential measured in tens of billions of dollars annually.
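The arbitrage arithmetic is short enough to sketch. The 50 GWh run size and the two prices are the figures from the paragraph above; the function name is just illustrative.

```python
def training_run_cost_usd(energy_gwh: float, price_usd_per_kwh: float) -> float:
    """Electricity bill for one training run at a flat price."""
    energy_kwh = energy_gwh * 1_000_000  # 1 GWh = 1,000,000 kWh
    return energy_kwh * price_usd_per_kwh


RUN_ENERGY_GWH = 50.0      # estimate quoted in the text
CHEAP_PRICE = 0.02         # $/kWh, stranded African renewables
EXPENSIVE_PRICE = 0.35     # $/kWh, constrained European grid

cheap_cost = training_run_cost_usd(RUN_ENERGY_GWH, CHEAP_PRICE)
expensive_cost = training_run_cost_usd(RUN_ENERGY_GWH, EXPENSIVE_PRICE)
savings = expensive_cost - cheap_cost
price_ratio = expensive_cost / cheap_cost

print(f"Cheap grid:     ${cheap_cost:,.0f}")
print(f"Expensive grid: ${expensive_cost:,.0f}")
print(f"Savings:        ${savings:,.0f} ({price_ratio:.1f}x price ratio)")
```

The ratio comes out to 17.5x, which is where the "17x" in the summary comes from.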

Training vs Inference: The Energy Consumption

Let me show you exactly how much money we're lighting on fire, broken down by category so you can pick which part makes you angriest:

Training Energy Profile:

Power density: 35-50 kW per rack (compared to traditional IT at 7-10 kW)

Duration: 3-6 months continuous operation

Utilization: 90-95% sustained (GPUs don't take coffee breaks)

Cooling overhead: 40-50% additional power for thermal management

Total facility power: 10-100 MW for a single training cluster

Let's make this concrete. Anthropic's Claude 3 training cluster (and I'm using public estimates here) likely consisted of:

20,000+ high-end GPUs (A100s/H100s)

500W per GPU at full load = 10 MW just for compute

Networking, storage, CPU support = +3 MW

Cooling at PUE 1.4 = +5.2 MW

Total facility demand: ~18 MW continuous for 4 months

That's 52,000 MWh for one training run. At typical industrial electricity rates of $0.08/kWh, that's $4.16 million in electricity alone. But they didn't train it once. They probably ran 50+ experiments. There's $200 million in electricity before you even serve your first customer.
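Here is the same estimate as a sketch you can poke at. Every input is one of the public-estimate figures listed above. The one assumption added is that "4 months" means 120 days. Without rounding to 18 MW the totals land slightly higher than the rounded numbers in the text.

```python
GPU_COUNT = 20_000
WATTS_PER_GPU = 500
SUPPORT_MW = 3.0           # networking, storage, CPU support
PUE = 1.4
RUN_HOURS = 120 * 24       # assumption: "4 months" taken as 120 days
PRICE_USD_PER_KWH = 0.08
EXPERIMENT_COUNT = 50

compute_mw = GPU_COUNT * WATTS_PER_GPU / 1_000_000
it_load_mw = compute_mw + SUPPORT_MW
facility_mw = it_load_mw * PUE
cooling_mw = facility_mw - it_load_mw   # overhead implied by the PUE

energy_mwh = facility_mw * RUN_HOURS
run_cost_usd = energy_mwh * 1_000 * PRICE_USD_PER_KWH
all_runs_cost_usd = run_cost_usd * EXPERIMENT_COUNT

print(f"Compute: {compute_mw:.1f} MW, cooling overhead: {cooling_mw:.1f} MW")
print(f"Facility demand: {facility_mw:.1f} MW")
print(f"Energy per run: {energy_mwh:,.0f} MWh, cost: ${run_cost_usd:,.0f}")
print(f"{EXPERIMENT_COUNT} runs: ${all_runs_cost_usd:,.0f}")
```

Change `PUE` or `PRICE_USD_PER_KWH` and the whole bill moves with it, which is the point of the rest of this chapter.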

Inference Energy Profile (The Part Everyone Underestimates):

Query processing: 0.1-1 kWh per million tokens

Global query volume: 100 billion+ queries/day across all LLMs

Latency requirements: <100ms response time

Geographic distribution: Needed everywhere

Redundancy requirements: 99.99% uptime

Inference scales with success. By 2030, if AI adoption follows internet adoption trajectories:

10 billion daily active AI users

1,000 queries per user per day average

10 trillion queries daily

At 0.5 kWh per 1M tokens average

= 50 GW of continuous inference load (this works out if the average query runs about 240 tokens)
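The list above never says how many tokens a query uses, and the answer depends on it entirely. A rough sketch of the conversion, with tokens per query as the free variable (the helper names are illustrative):

```python
DAILY_USERS = 10e9
QUERIES_PER_USER = 1_000
KWH_PER_MILLION_TOKENS = 0.5
TARGET_LOAD_GW = 50.0

queries_per_day = DAILY_USERS * QUERIES_PER_USER  # 10 trillion


def continuous_load_gw(tokens_per_query: float) -> float:
    """Average power needed to serve the daily query volume."""
    tokens_per_day = queries_per_day * tokens_per_query
    kwh_per_day = tokens_per_day / 1e6 * KWH_PER_MILLION_TOKENS
    average_kw = kwh_per_day / 24
    return average_kw / 1e6  # 1 GW = 1,000,000 kW


def implied_tokens_per_query(load_gw: float) -> float:
    """Tokens per query that would produce the given continuous load."""
    kwh_per_day = load_gw * 1e6 * 24
    tokens_per_day = kwh_per_day / KWH_PER_MILLION_TOKENS * 1e6
    return tokens_per_day / queries_per_day


tokens_needed = implied_tokens_per_query(TARGET_LOAD_GW)
print(f"Tokens per query implied by {TARGET_LOAD_GW:.0f} GW: {tokens_needed:.0f}")
for tokens in (100, 240, 1_000):
    print(f"{tokens:>5} tokens/query -> {continuous_load_gw(tokens):.1f} GW")
```

The 50 GW figure corresponds to about 240 tokens per query. Longer answers or agent-style workloads push the load up proportionally.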

The Thermodynamics of Geographical Advantage

But raw electricity cost tells only part of the story. The total energy cost of computation includes cooling overhead, measured by Power Usage Effectiveness (PUE). Traditional datacenters in temperate climates achieve PUE ratios of 1.1 to 1.2, meaning 10-20% additional energy for cooling. This assumption breaks down entirely in tropical environments, where ambient temperatures regularly exceed 35°C. The conventional wisdom suggests this makes Africa unsuitable for large-scale compute infrastructure.

The conventional wisdom, as we shall see, is catastrophically wrong.

When ambient temperatures exceed 27°C, outside air alone can no longer keep server inlets cool enough, so air cooling needs mechanical chilling, and chillers are bound by the Carnot efficiency limit. The coefficient of performance (COP) of a chiller drops precipitously as the lift between the cold supply air and the hot ambient air increases. At 35°C ambient, a chip held below 70°C has only 35°C of headroom for dumping heat into outside air, compared with about 55°C at the 15°C ambient typical of Nordic datacenters. This would seem to doom tropical compute infrastructure to permanent disadvantage.
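To see how quickly the Carnot ceiling falls as the outside air heats up, here is a minimal sketch. The 20°C supply-air temperature is an assumption chosen for illustration, not a figure from any real facility. Real chillers reach only a fraction of the ideal COP, so treat these as upper bounds.

```python
def carnot_cop_cooling(cold_c: float, hot_c: float) -> float:
    """Ideal (Carnot) COP for pumping heat from cold_c up to hot_c."""
    cold_k = cold_c + 273.15
    hot_k = hot_c + 273.15
    if hot_k <= cold_k:
        raise ValueError("no heat pumping needed: ambient is not above the cold side")
    return cold_k / (hot_k - cold_k)


SUPPLY_AIR_C = 20.0  # assumed cold-side temperature, for illustration only

for ambient_c in (25.0, 35.0, 45.0):
    cop = carnot_cop_cooling(SUPPLY_AIR_C, ambient_c)
    print(f"Ambient {ambient_c:.0f} C: ideal COP {cop:.1f}")
```

Going from a 5°C lift to a 15°C lift cuts the ideal COP to a third, which is why chilled-air designs get expensive fast in hot climates.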

Yet this analysis commits a fundamental error: it assumes we must use the same cooling architectures developed for temperate climates. When forced to engineer for 35°C ambient temperatures from first principles, entirely different optimal solutions emerge. Direct liquid cooling, mandatory in these conditions, achieves superior heat transfer coefficients compared to air cooling—approximately 3,000 W/m²K for water versus 25 W/m²K for air. Immersion cooling in dielectric fluids pushes this even further, enabling heat removal at densities exceeding 100 kW per rack while maintaining lower total system PUE than air-cooled facilities.

The physics of phase-change cooling opens even more dramatic possibilities. Evaporative cooling systems designed for African climates can achieve effective PUE ratios below 1.05 in coastal regions where seawater provides unlimited cooling capacity. The latent heat of vaporization for water (2,257 kJ/kg) means that evaporating just one liter of water can remove 627 watt-hours of heat, enough to cool a 500 W GPU at full load for about an hour and a quarter.
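The latent-heat number is easy to verify, and how long a liter keeps a GPU cool depends on the GPU's power draw. A small sketch, assuming one liter of water weighs one kilogram and that all of the GPU's electrical power ends up as heat:

```python
LATENT_HEAT_KJ_PER_KG = 2257.0
KJ_PER_WH = 3.6  # 1 Wh = 3,600 J = 3.6 kJ


def heat_removed_wh(liters: float) -> float:
    """Heat absorbed by evaporating this much water (1 L taken as 1 kg)."""
    return liters * LATENT_HEAT_KJ_PER_KG / KJ_PER_WH


def cooling_hours(liters: float, gpu_watts: float) -> float:
    """Hours of full-load GPU heat that the evaporated water can absorb."""
    return heat_removed_wh(liters) / gpu_watts


one_liter_wh = heat_removed_wh(1.0)
print(f"One liter evaporated removes {one_liter_wh:.0f} Wh")
for watts in (300, 500, 700):
    print(f"{watts} W GPU: {cooling_hours(1.0, watts):.2f} hours per liter")
```

At 300 W a liter lasts a bit over two hours. At the 500 W used in the cluster estimate earlier it lasts about an hour and a quarter.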

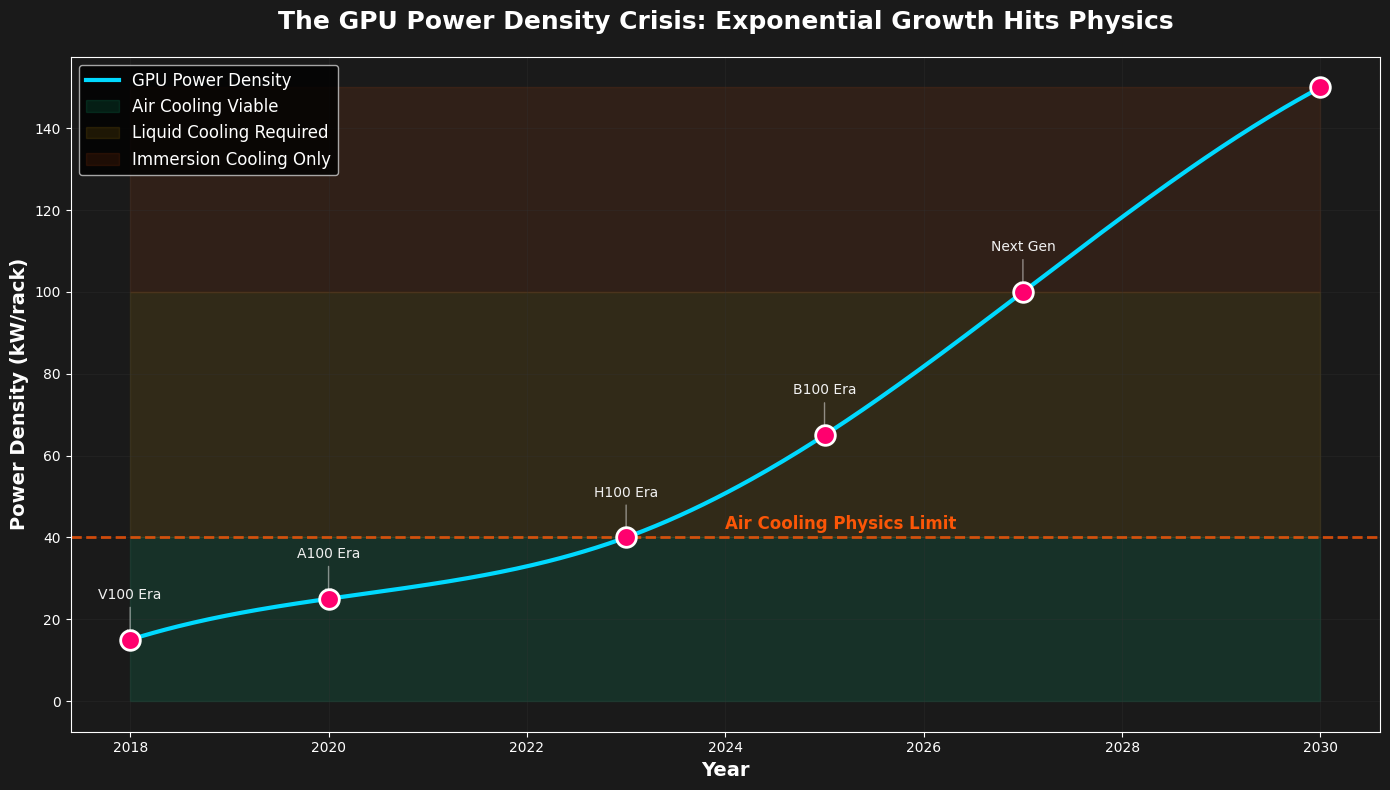

Power Density Evolution: The Physics Problem

Traditional datacenters were designed for roughly 5-10 kW per rack. Email servers, web hosting, basic compute. Then came AI:

The GPU Power Density Explosion:

2018: 15 kW/rack (V100 era)

2020: 25 kW/rack (A100 era)

2023: 40 kW/rack (H100 era)

2025: 60-80 kW/rack (B100/next gen)

2027 projection: 100+ kW/rack

You can't just retrofit existing facilities. At 40 kW/rack, air cooling becomes a physics problem, not an engineering problem. You're trying to remove the heat equivalent of 40 hair dryers running continuously in a space the size of a refrigerator.

In Africa, where ambient temperatures regularly hit 40°C, you're forced into advanced cooling from day one. That's not a disadvantage—it's a competitive moat. You can't half-ass cooling in Nairobi like you can in Dublin.

The Continental Arbitrage Opportunity

Consider the thermodynamic advantages of specific African locations:

East African Coast (Mombasa, Dar es Salaam):

Indian Ocean maintains 25-28°C at accessible depths

Year-round free cooling with 3-5°C approach temperatures

Unlimited seawater for cooling

Compare: Silicon Valley hits 40°C with water scarcity

Ethiopian Highlands (Addis Ababa):

2,355 meters elevation = 15-20°C cooler than sea level

Intense equatorial solar radiation

Reduced atmospheric pressure (75-80 kPa) improves phase-change cooling

Sits atop geothermal baseload resources
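The highland numbers can be sanity-checked against the International Standard Atmosphere. This sketch uses the standard lapse rate and barometric formula, which describe an idealized average atmosphere rather than Addis Ababa's actual weather, so expect real conditions to wander around these values.

```python
LAPSE_RATE_K_PER_M = 0.0065        # standard tropospheric lapse rate
SEA_LEVEL_TEMP_K = 288.15          # standard sea-level temperature (15 C)
SEA_LEVEL_PRESSURE_KPA = 101.325
BAROMETRIC_EXPONENT = 5.25588      # g*M / (R*L) for the standard atmosphere

ADDIS_ELEVATION_M = 2355.0         # elevation quoted in the text


def temperature_drop_c(elevation_m: float) -> float:
    """Cooling relative to sea level under the standard lapse rate."""
    return LAPSE_RATE_K_PER_M * elevation_m


def pressure_kpa(elevation_m: float) -> float:
    """Air pressure from the standard-atmosphere barometric formula."""
    ratio = 1.0 - LAPSE_RATE_K_PER_M * elevation_m / SEA_LEVEL_TEMP_K
    return SEA_LEVEL_PRESSURE_KPA * ratio ** BAROMETRIC_EXPONENT


drop_c = temperature_drop_c(ADDIS_ELEVATION_M)
pressure = pressure_kpa(ADDIS_ELEVATION_M)
print(f"Temperature drop vs sea level: {drop_c:.1f} C")
print(f"Standard-atmosphere pressure:  {pressure:.1f} kPa")
```

Both results fall inside the ranges listed above, at the low end for the temperature drop and near 76 kPa for pressure.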

Sahel Solar Belt:

2,500-3,000 kWh/m²/year direct normal irradiance

Compare: Germany gets 1,000-1,500 kWh/m²/year

Same solar panel produces roughly 2-2.5x the lifetime energy

Construction costs differ by <20%

The fundamental thermodynamic equation governing datacenter efficiency:

Total Energy Cost = (Computational Energy × PUE) × (Energy Price per kWh)

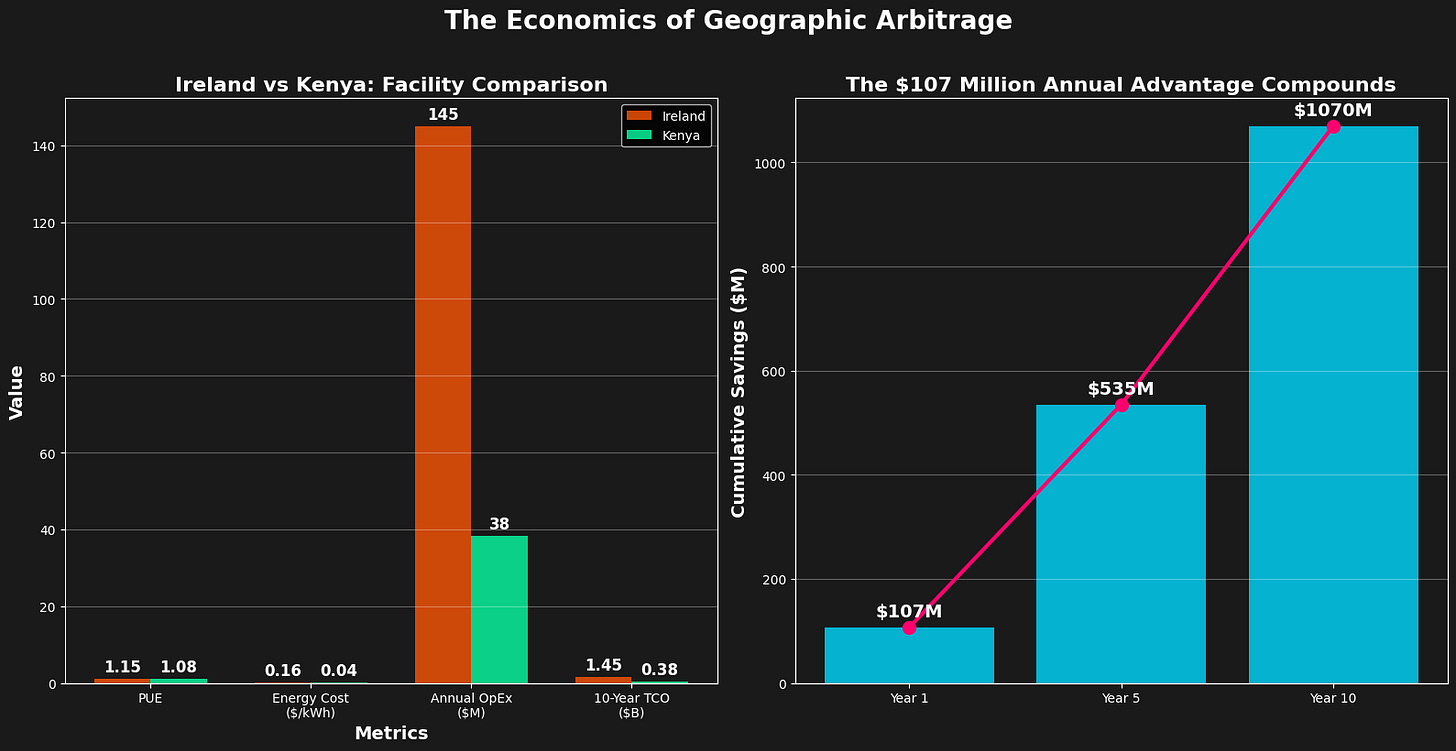

Let me show you how this plays out with real numbers:

100 MW AI Training Facility - Ireland:

PUE: 1.15 (excellent by industry standards)

Energy cost: €0.15/kWh ($0.16/kWh)

Total cost per computational kWh: $0.184

Annual energy cost at 90% utilization: $145 million

Same Facility - Kenya (Engineered for Climate):

PUE: 1.08 (liquid cooling mandatory, geothermal baseload)

Energy cost: $0.045/kWh (geothermal + solar blend)

Total cost per computational kWh: $0.0486

Annual energy cost at 90% utilization: $38.3 million

That's $107 million in annual operating cost advantage. For one facility. The global AI infrastructure buildout needs thousands.
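The comparison is just the cost equation applied twice. A minimal sketch, assuming the 100 MW refers to IT (compute) load, since that is the reading that reproduces the figures above. The `Site` class is purely illustrative.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Site:
    name: str
    pue: float
    price_usd_per_kwh: float

    def cost_per_compute_kwh(self) -> float:
        """Price of one kWh delivered to the chips, cooling overhead included."""
        return self.pue * self.price_usd_per_kwh

    def annual_cost_usd(self, it_load_mw: float, utilization: float) -> float:
        compute_kwh = it_load_mw * 1_000 * HOURS_PER_YEAR * utilization
        return compute_kwh * self.cost_per_compute_kwh()


ireland = Site("Ireland", pue=1.15, price_usd_per_kwh=0.16)
kenya = Site("Kenya", pue=1.08, price_usd_per_kwh=0.045)

ireland_cost = ireland.annual_cost_usd(it_load_mw=100, utilization=0.90)
kenya_cost = kenya.annual_cost_usd(it_load_mw=100, utilization=0.90)

for site, cost in ((ireland, ireland_cost), (kenya, kenya_cost)):
    print(f"{site.name}: ${site.cost_per_compute_kwh():.4f}/kWh, ${cost / 1e6:.1f}M per year")
print(f"Annual advantage: ${(ireland_cost - kenya_cost) / 1e6:.1f}M")
```

Most of the gap comes from the electricity price. The PUE difference alone would move the bill by only about 6%.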

Grid Stability and the Off-Grid Advantage

AI workloads aren't like traditional compute. They're sustained, intensive, and synchronized. When 10,000 GPUs kick into high gear simultaneously:

Instantaneous Load Changes:

0 to 10 MW in <100 milliseconds

Harmonic distortion from switching power supplies

Power factor correction requirements

Voltage stability within ±2%

Traditional grids can't handle this. California is installing 100 MWh battery farms just to buffer AI training loads. That's like buying a swimming pool because your bathtub overflows. Just... fix the bathtub? Or better yet, move somewhere with working plumbing. Most African grids can't provide this either. But that's exactly why off-grid makes sense—you engineer the solution from scratch instead of trying to retrofit 50-year-old infrastructure.

The intermittency challenge of solar power, often cited as disqualifying for datacenter use, becomes manageable when properly understood in the context of AI workloads. Training runs can checkpoint progress and tolerate interruptions. A training cluster can operate at 100% capacity during peak solar hours, reduce to 50% overnight on stored energy or backup generation, and resume full capacity at sunrise. This load-following approach, impossible with traditional datacenter workloads, aligns perfectly with renewable generation profiles.
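Load-following has a price: the run takes longer. A rough sketch of how much longer, assuming 8 hours a day at full power (a made-up figure for illustration) and training throughput that scales linearly with cluster utilization, which real jobs only approximate:

```python
def average_utilization(full_power_hours: float, overnight_fraction: float) -> float:
    """Day-averaged cluster utilization under a two-level schedule."""
    if not 0 <= full_power_hours <= 24:
        raise ValueError("full_power_hours must be between 0 and 24")
    reduced_hours = 24 - full_power_hours
    return (full_power_hours * 1.0 + reduced_hours * overnight_fraction) / 24


def stretched_duration_days(baseline_days: float,
                            full_power_hours: float,
                            overnight_fraction: float) -> float:
    """Wall-clock days needed versus a run at 100% around the clock."""
    return baseline_days / average_utilization(full_power_hours, overnight_fraction)


FULL_POWER_HOURS = 8.0     # assumed peak-solar window, illustration only
OVERNIGHT_FRACTION = 0.5   # the 50% overnight level described above

utilization = average_utilization(FULL_POWER_HOURS, OVERNIGHT_FRACTION)
duration = stretched_duration_days(120, FULL_POWER_HOURS, OVERNIGHT_FRACTION)
print(f"Average utilization: {utilization:.0%}")
print(f"A 120-day run stretches to about {duration:.0f} days")
```

Under these assumptions a four-month run becomes a six-month run. Whether that trade is worth it comes down to how cheap the daytime power is.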

At this point, you're either convinced or you think I've lost it. Fair. But before you close this tab, let me show you what's already happening while we're arguing about it:

The 2030 Reality Check

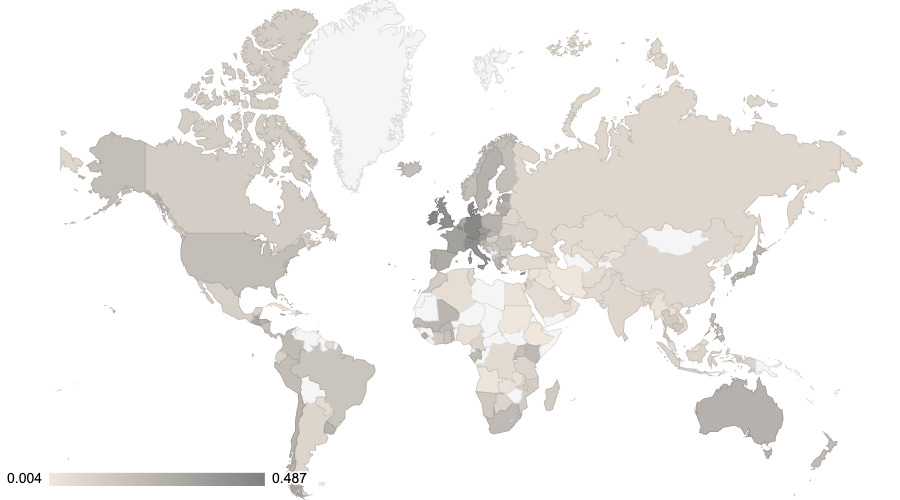

Current global AI compute distribution:

United States: 45% (concentrated in Virginia, Oregon, Texas)

China: 25% (older hardware, sanctions-constrained)

Europe: 15% (energy-cost challenged)

Rest of World: 15% (Africa <1%)

Projected new capacity needs by 2030:

Conservative: 30-50 GW ($300-500 billion investment)

Moderate: 100-150 GW ($1-1.5 trillion investment)

Aggressive: 300-400 GW ($3-4 trillion investment)

Current global capacity addition rate: 20 GW annually

Required rate: 10-50x current

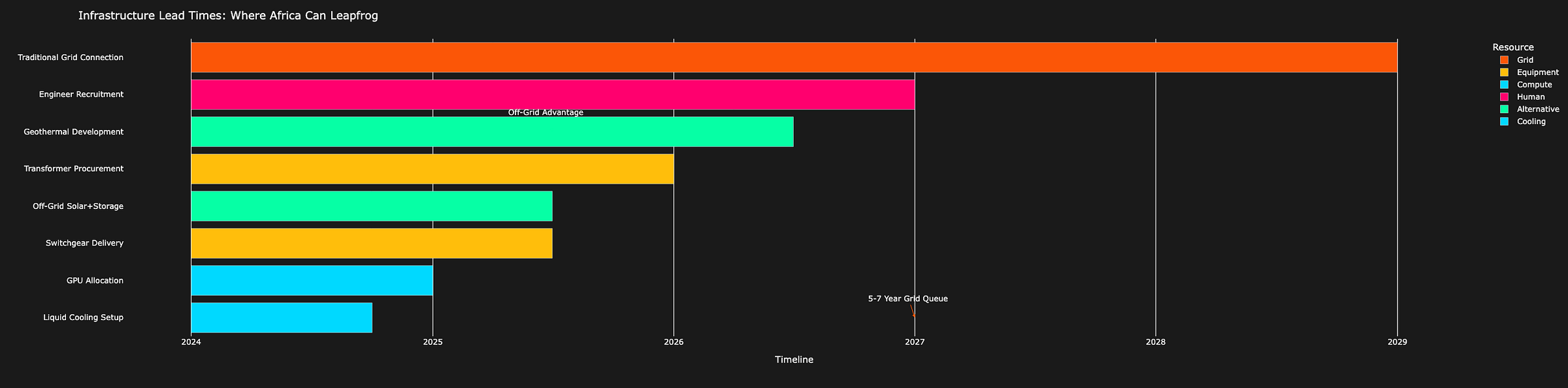

The bottlenecks preventing traditional locations from scaling:

Transformer Manufacturing: 2-year lead times, 3 global suppliers

High-Voltage Switchgear: 18-month lead times

GPU Production: TSMC constrained at 3nm/5nm nodes

Skilled Engineers: 500,000 shortage globally

Grid Interconnection: 5-7 year queues in developed markets

The Thermodynamic Inevitability

This brings us to the crucial insight: Africa's supposed disadvantages in ambient temperature force engineering solutions that ultimately prove superior to temperate-climate approaches. Mandatory liquid cooling, renewable-grid integration, and waste-heat utilization create systemic efficiencies impossible in locations where air cooling and grid power remain viable. The constraint becomes the catalyst for innovation.

I know this sounds like I'm trying to sell you beachfront property in the Sahara. But here's the thing: the Sahara has something California doesn't – unlimited free cooling capacity if you're not too proud to use it.

The thermodynamics of computation in tropical climates also opens possibilities for waste heat utilization absent in traditional datacenters. High-grade waste heat (60-80°C) from liquid-cooled systems can drive absorption chillers, desalination plants, or agricultural drying operations. A 100 MW datacenter rejecting heat at 70°C could desalinate 50,000 cubic meters of water daily using multi-effect distillation—enough for 250,000 people. In water-scarce regions, the datacenter becomes a water producer rather than consumer.
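A quick way to pressure-test the desalination claim is to back out what it implies per cubic meter. The sketch below assumes all 100 MW ends up as recoverable heat, which is optimistic. It does not try to say whether a multi-effect distillation plant can actually hit the resulting figure with 70°C heat; that part needs a real desalination engineer.

```python
WASTE_HEAT_MW = 100.0            # assumes every watt is recoverable as heat
WATER_M3_PER_DAY = 50_000.0
PEOPLE_SERVED = 250_000

thermal_kwh_per_day = WASTE_HEAT_MW * 1_000 * 24
implied_kwh_per_m3 = thermal_kwh_per_day / WATER_M3_PER_DAY
liters_per_person_per_day = WATER_M3_PER_DAY * 1_000 / PEOPLE_SERVED

print(f"Thermal energy available: {thermal_kwh_per_day:,.0f} kWh/day")
print(f"Implied thermal budget:   {implied_kwh_per_m3:.0f} kWh per cubic meter")
print(f"Water per person:         {liters_per_person_per_day:.0f} liters/day")
```

The claim needs the plant to produce fresh water on about 48 kWh of heat per cubic meter, and it hands each person 200 liters a day.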

The geographic distribution of global compute infrastructure reflects historical accidents rather than physical optimization. Virginia hosts massive datacenter clusters not due to thermodynamic advantages but because of proximity to government agencies and network interconnection points established decades ago. This path dependence now costs the industry billions annually in suboptimal energy expenses and constrains growth as grid capacity reaches physical limits.

Look, I get it. You've got a nice office in Palo Alto. Your VCs are down the street. Your favorite overpriced coffee is walking distance. Moving compute to Africa sounds like something a crazy person suggests at 3am after too much Red Bull.

I am that crazy person. It is 3am. But the math doesn't care what time it is.

In Summary

Everyone's treating AI infrastructure like it's just bigger datacenters. It's not. It's a fundamental reimagining of energy infrastructure at planetary scale. And the winners won't be who you expect.

The hyperscalers know this. Microsoft is exploring deals in Kenya. Amazon is evaluating Morocco. Chinese firms are establishing positions in Egypt. They're not doing this for corporate social responsibility. They're following the thermodynamic gradient to its logical conclusion.

The question is not whether Africa can compete in AI infrastructure, but whether existing compute locations can maintain competitiveness as thermodynamic realities assert themselves. Energy costs make up 40-60% of total datacenter operating expenses. A 3-4x disadvantage in energy costs compounds annually, eventually overwhelming any initial advantages in network connectivity or ecosystem density.

The migration of energy-intensive industries to regions of energy abundance represents a historical constant, from aluminum smelting to cryptocurrency mining. AI infrastructure will follow the same inexorable logic. The only question is who moves first and captures the advantage.

This thermodynamic analysis establishes the foundational physics that governs competitive advantage in AI infrastructure. But converting theoretical advantage into practical reality requires understanding the full stack of dependencies—from mineral extraction to human capital—that determine success in the computational economy. The energy advantage means nothing without the ability to execute infrastructure at scale.

End Note

Still here? Good. That means you're either running an AI infrastructure fund, engineering at a hyperscaler, or you're my mom (hi mom).

Physics doesn't negotiate. Thermodynamics doesn't care about your venture fund's thesis. And energy costs compound relentlessly, quarter after quarter, until geographic advantage becomes geographic inevitability.

Want to argue? Bring spreadsheets.

Disclaimer

I don't know shit about datacenters.

There, I said it. Never built one. Never worked in one. My understanding of cooling systems comes from YouTube University and pestering engineers until they explain things like I'm five.

All models are wrong; some are useful. Ours at least show their work.

All calculations assume physics continues to work as advertised. If thermodynamics stops functioning (or gets disrupted by a YC startup we haven't heard about yet), please disregard this analysis and panic accordingly.

If energy becomes free, or if someone invents room-temperature superconductors that actually work, please disregard everything above.

If you actually build datacenters and you're angry right now, good. Email me. Tell me exactly how wrong I am. I'll update the analysis and credit you. This is basically peer review by public humiliation and I'm weirdly okay with that.

Or don't. I'll keep publishing these things anyway. Someone needs to do the uncomfortable math, even if that someone failed thermodynamics twice and learned Excel from YouTube.

Data Sources: Public filings, engineering first principles from textbooks I half-understood, manufacturer spec sheets (which are optimistic), field performance data (which is depressing), and that one guy who definitely doesn't work at a major hyperscaler who definitely didn't confirm our estimates over drinks.

My math is probably wrong. Please check it. I'm serious. Half these calculations I had to derive from first principles because nobody publishes the real numbers. The other half I got from engineers who made me promise not to name them.

The ±15% margin of error on our projections assumes rational behavior. If humans are involved, double it. If I am one of those humans, triple it. If committees are involved, abandon hope.

We have no conflicts of interest unless you count an unreasonable attachment to arithmetic and a stubborn belief that energy is a physical commodity, not a software abstraction. If you think you can virtualize thermodynamics, disrupt physics, or make electrons obey Moore's Law, this analysis will hurt your feelings. We're not sorry.

Reproduction encouraged but cite your sources. We spent too many nights calculating cooling coefficients and cross-referencing energy tariffs to let someone else take credit. Plus, when this thesis goes mainstream in 18 months, we want receipts.

Some confessions:

Learned about "duck curves" from a meme. Still my primary source.

Had to watch three different videos to understand immersion cooling

My thermodynamics knowledge peaked in 2015 and it wasn't great then

Excel is held together with prayers and circular references

Pretty sure I reinvented some basic engineering principles because I didn't know the terms to Google

Maybe I'm talking my own book. After six months down this rabbit hole, I'm convinced someone's going to make stupid money building AI infrastructure in Africa. Might even be dumb enough to try it myself once I figure out what a "transformer substation" actually does.

Welcome to the support group. We're all imposters here.

But at least our spreadsheets balance.

(Usually.)

Not Financial, Legal, or Career Advice. Obviously.

Nothing in this analysis constitutes financial, legal, or career advice. If you're making billion-dollar infrastructure decisions based on some guy's thermodynamics blog post, that's between you and your portfolio. This is not legal advice either—I'm an engineer who can't even spell "indemnification" without autocorrect. This is not investment advice. I am not your advisor. I'm just someone with too many spreadsheets and too much caffeine.

These are my opinions. Mine. Nobody else's. This does not reflect the official position of any company, organization, or sentient AI. Any resemblance to corporate strategy, living or dead, is purely coincidental. They especially don't reflect the views of any employer past, present, or future. My employer doesn't even know I write this stuff at 2am.

Full Disclosure: The author may have interests in renewable energy projects and companies. I'm basically emotionally invested in anything that makes electrons cheaper. Might even be dumb enough to put money where my spreadsheets are.